Our dataset comprises over 99 video clips of road scenes with both occluded and non-occluded pedestrians, including cases where occluded pedestrians become visible after several frames. It covers a diverse collection of road scenes with partially and fully occluded pedestrians in both real and virtual environments.

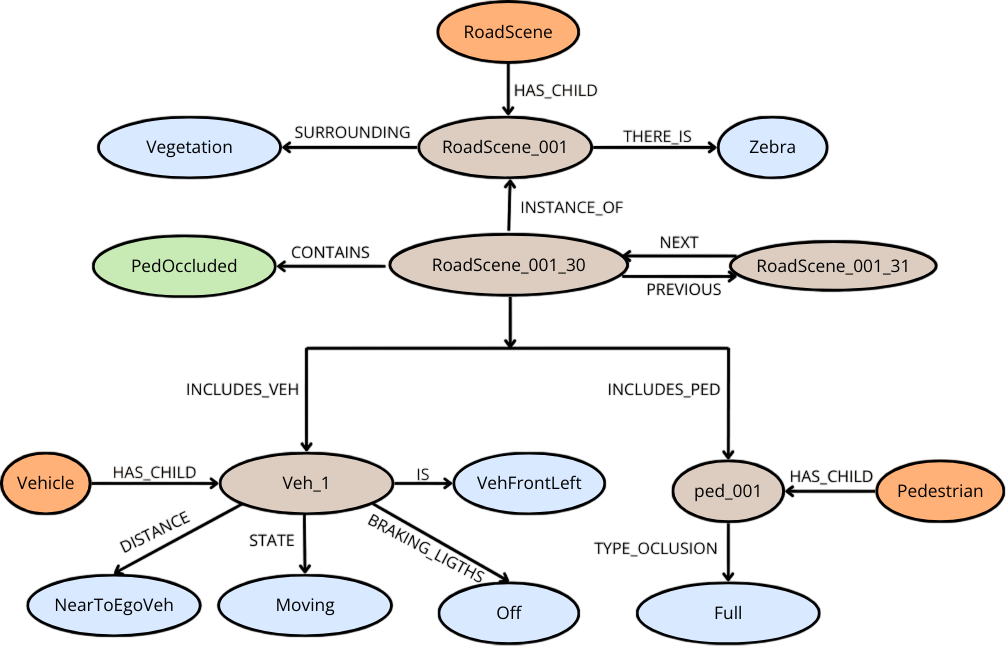

The dataset includes multiple labels that give an overview of each road scenario and provide frame-by-frame information about the actors in the scene:

- Presence of zebra crossing
- Surroundings
- Number of lanes
- Pedestrian scene occlusion
- Vehicles data
- Pedestrians data
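The README does not specify the annotation file format. As a purely illustrative sketch, the labels listed above could be held in memory as follows. Every class and field name here (`SceneAnnotation`, `PedestrianFrame`, `occlusion`, the `"none"`/`"partial"`/`"full"` values, `first_visible_frame`) is a hypothetical assumption, not the dataset's actual schema. The helper finds the first frame in which a pedestrian is no longer fully occluded, matching the "becomes visible after several frames" cases described earlier.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class PedestrianFrame:
    # Hypothetical per-frame pedestrian record; names and values are assumptions.
    frame: int
    pedestrian_id: int
    occlusion: str  # assumed categories: "none", "partial", "full"


@dataclass
class VehicleFrame:
    # Hypothetical per-frame vehicle record.
    frame: int
    vehicle_id: int


@dataclass
class SceneAnnotation:
    # Scene-level labels mirroring the list above (field names are hypothetical).
    clip_id: str
    zebra_crossing: bool
    surroundings: str
    num_lanes: int
    pedestrian_scene_occlusion: str
    vehicles: List[VehicleFrame] = field(default_factory=list)
    pedestrians: List[PedestrianFrame] = field(default_factory=list)

    def first_visible_frame(self, pedestrian_id: int) -> Optional[int]:
        """Return the earliest frame where the pedestrian is not fully occluded, or None."""
        frames = sorted(
            p.frame
            for p in self.pedestrians
            if p.pedestrian_id == pedestrian_id and p.occlusion != "full"
        )
        return frames[0] if frames else None
```

For example, a pedestrian annotated as fully occluded in frames 0 and 1 and partially visible from frame 3 onward yields `first_visible_frame(...) == 3`.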

Pedestrian detection is a critical task in autonomous driving, aimed at enhancing safety and reducing risks on the road. In recent years, significant advances have been made in detection performance. However, these achievements still fall short of human perception, particularly for occluded pedestrians and especially for entirely invisible ones. In this work, we present the OccluRoads dataset, which features a diverse collection of road scenes with partially and fully occluded pedestrians in both real and virtual environments. All scenes are meticulously labeled and enriched with contextual information that captures human perception in such scenarios. Using this dataset, we developed a pipeline to predict the presence of occluded pedestrians, leveraging a knowledge graph (KG), knowledge graph embeddings (KGE), and a Bayesian inference process. Our approach achieves an F1 score of 0.91, an improvement of up to 42% over traditional machine learning models.
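The abstract does not detail the Bayesian inference step. The sketch below is only a generic illustration of Bayes' rule applied to the question "is an occluded pedestrian present?". It assumes binary contextual cues that are conditionally independent given the hypothesis (a naive Bayes assumption), and the function name, cue names, and probabilities are hypothetical. It is not the authors' KG/KGE pipeline.

```python
from typing import Dict, Tuple


def posterior_presence(
    prior: float,
    likelihoods: Dict[str, Tuple[float, float]],
    observed: Dict[str, bool],
) -> float:
    """Posterior P(occluded pedestrian present | observed cues).

    prior:       P(present)
    likelihoods: cue -> (P(cue=True | present), P(cue=True | absent))
    observed:    cue -> observed boolean value
    Assumes cues are conditionally independent given presence (naive Bayes).
    """
    p_present, p_absent = prior, 1.0 - prior
    for cue, value in observed.items():
        l_present, l_absent = likelihoods[cue]
        # Multiply in the likelihood of the observed value under each hypothesis.
        p_present *= l_present if value else 1.0 - l_present
        p_absent *= l_absent if value else 1.0 - l_absent
    # Normalize so the two hypotheses sum to 1.
    return p_present / (p_present + p_absent)
```

With a prior of 0.5 and a hypothetical cue `zebra_crossing` whose likelihoods are 0.8 (present) and 0.2 (absent), observing a zebra crossing raises the posterior to 0.8. Observing its absence lowers the posterior to 0.2.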

    @misc{occluRoads,
      title={Prediction of Occluded Pedestrians in Road Scenes using Human-like Reasoning: Insights from the OccluRoads Dataset},
      author={Melo Castillo Angie Nataly and Martin Serrano Sergio and Salinas Carlota and Sotelo Miguel Angel},
      year={2024},
      eprint={2412.06549},
      archivePrefix={arXiv},
      primaryClass={cs.CV},
      url={https://arxiv.org/abs/2412.06549}
    }

To request access to the dataset, or for any questions regarding it, please contact Nataly Melo (nataly.melo@uah.es).

The videos and annotations are released under the MIT License.